Chapter 4 Data-Level Parallelism in Vector, SIMD, and GPU Architectures Computer Architecture A Quantitative Approach, Fifth Edition.

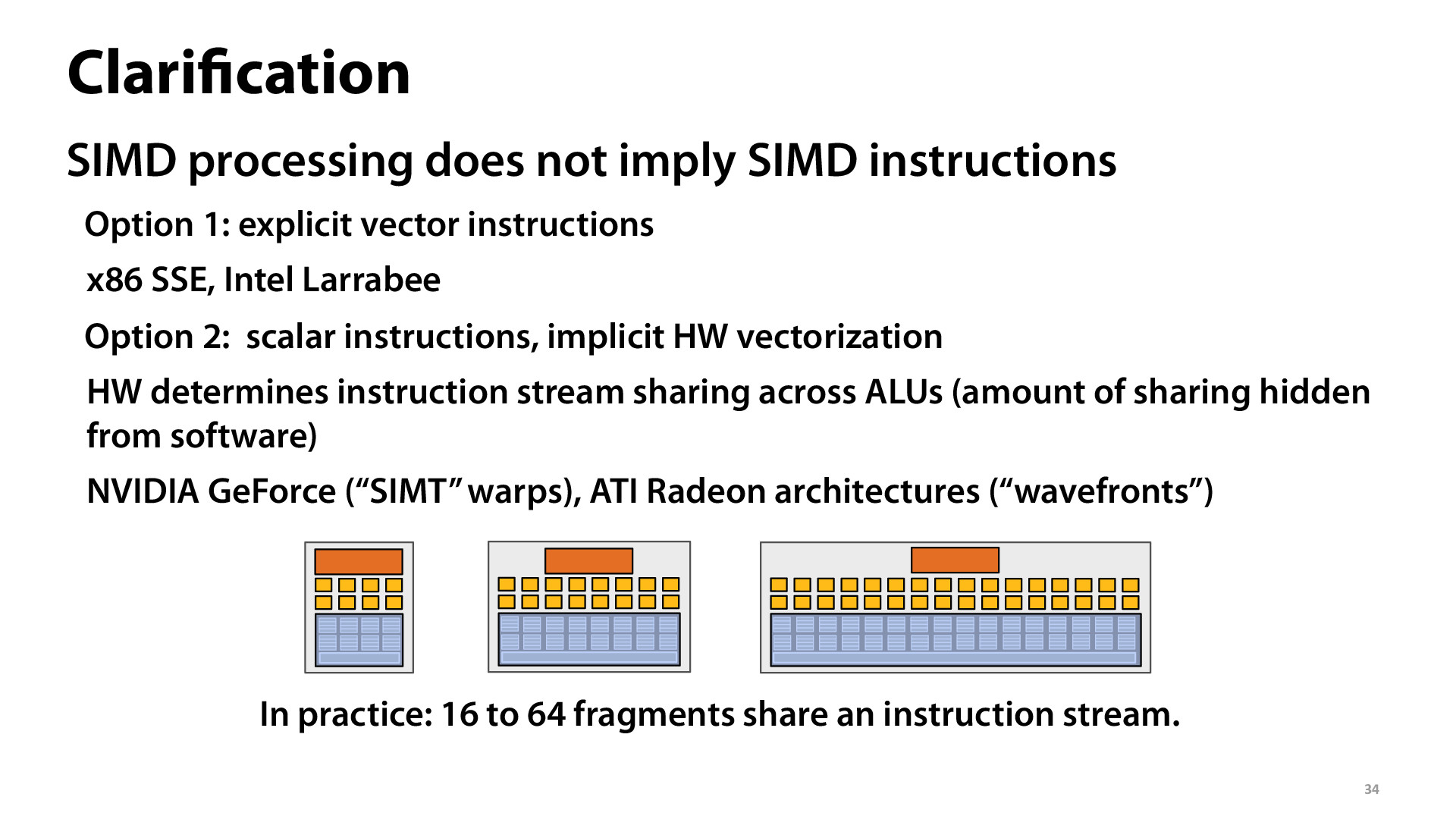

Concepts Introduced in Chapter 4: SIMD Advantages; Vector Architectures; Extending RISC-V to Support Vector Operations (RV64V)

Many SIMDs Make One Compute Unit - AMD's Graphics Core Next Preview: AMD's New GPU, Architected For Compute

Appendix C: The concept of GPU compiler — Tutorial: Creating an LLVM Backend for the Cpu0 Architecture

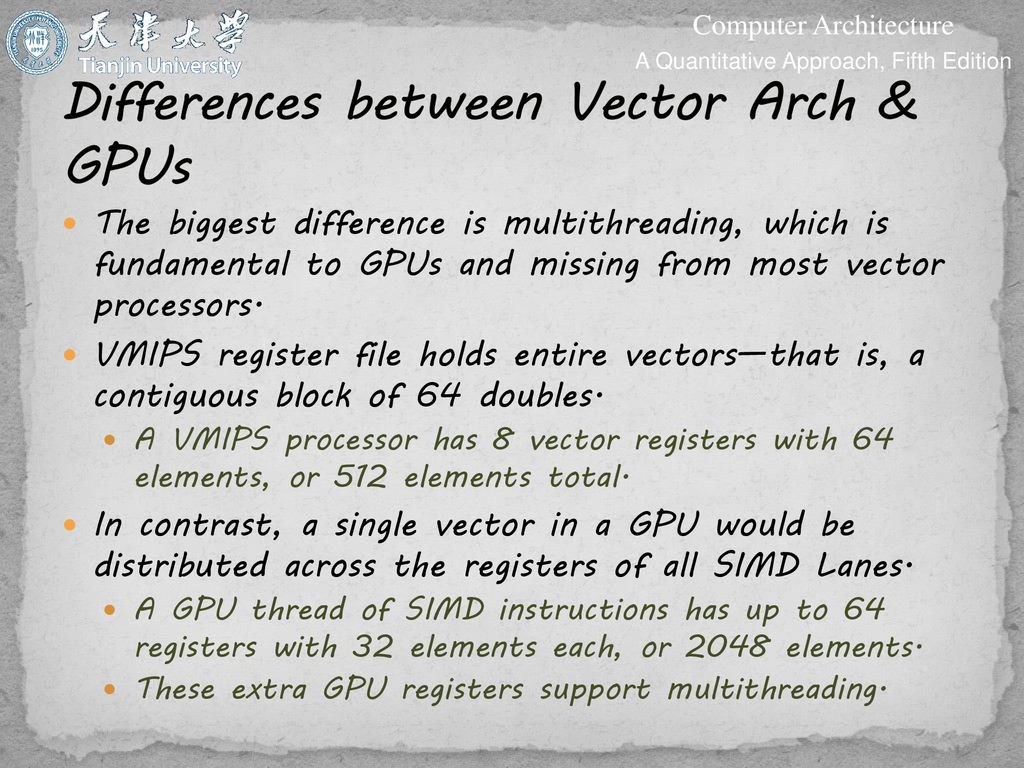

Chapter 4 Data-Level Parallelism in Vector, SIMD, and GPU Architectures Topic 22 Similarities & Differences between Vector Arch & GPUs Prof. Zhang Gang.
